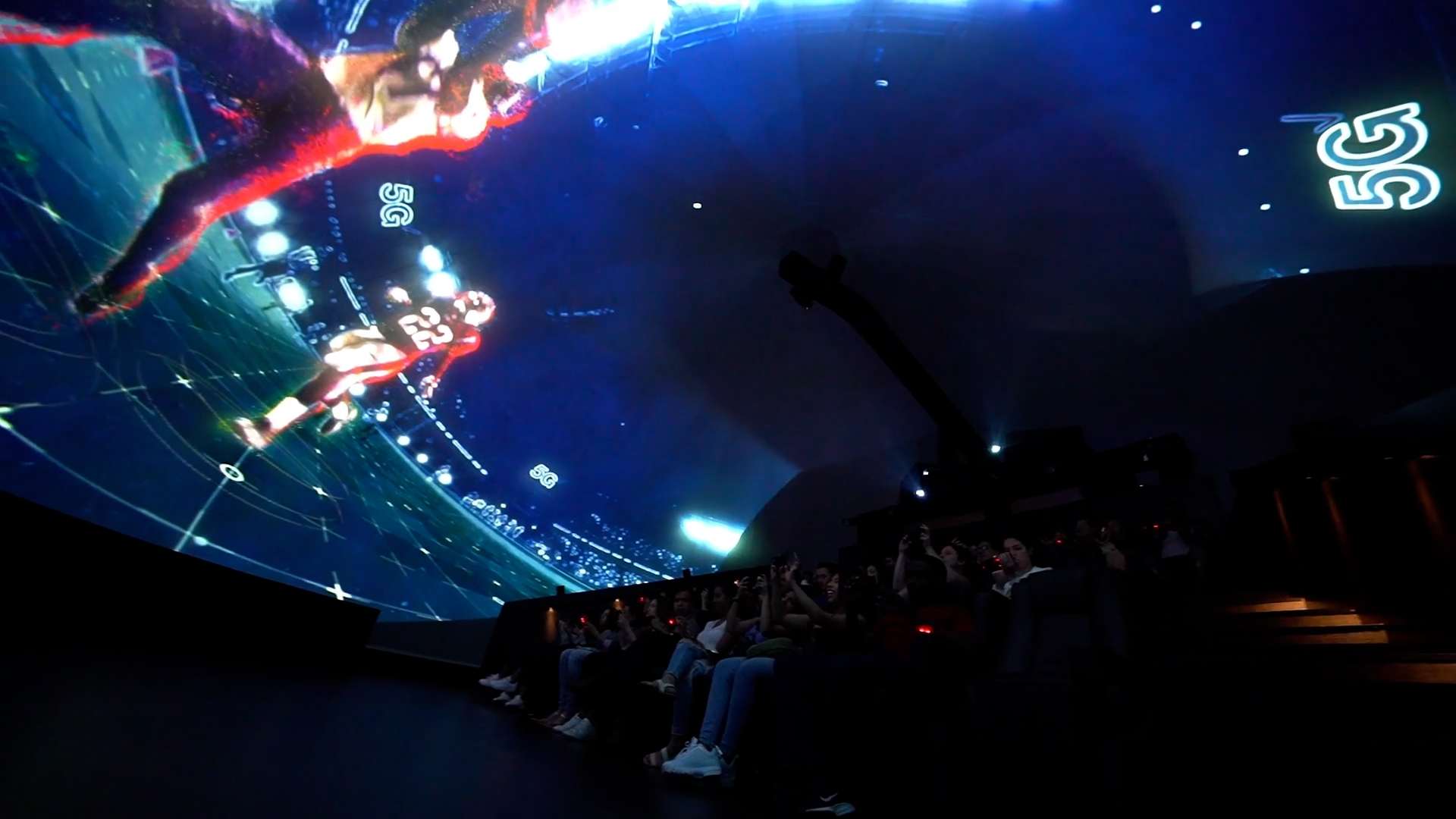

To showcase the future of 5G and its impact on the NFL, we partnered with Momentum Worldwide & Verizon to create a unique interactive activation in Miami's Bayfront Park for Super Bowl LIV.

The activation synced dome-projected content with a Unity app running on fifty 5G phones within the space. NFL fans were able to experience football in a way never seen before as the app put the power of 5G into their hands, displaying how augmented reality, volumetric replays and 4K multi-camera views will change the future of the sport.

The Shoot

In mid-December, after weeks of pre-production, we set off to Miami's Hard Rock Stadium with high ambitions. Our goal was to take guests on a ride that descended from the heavens and placed them right in the middle of the action.

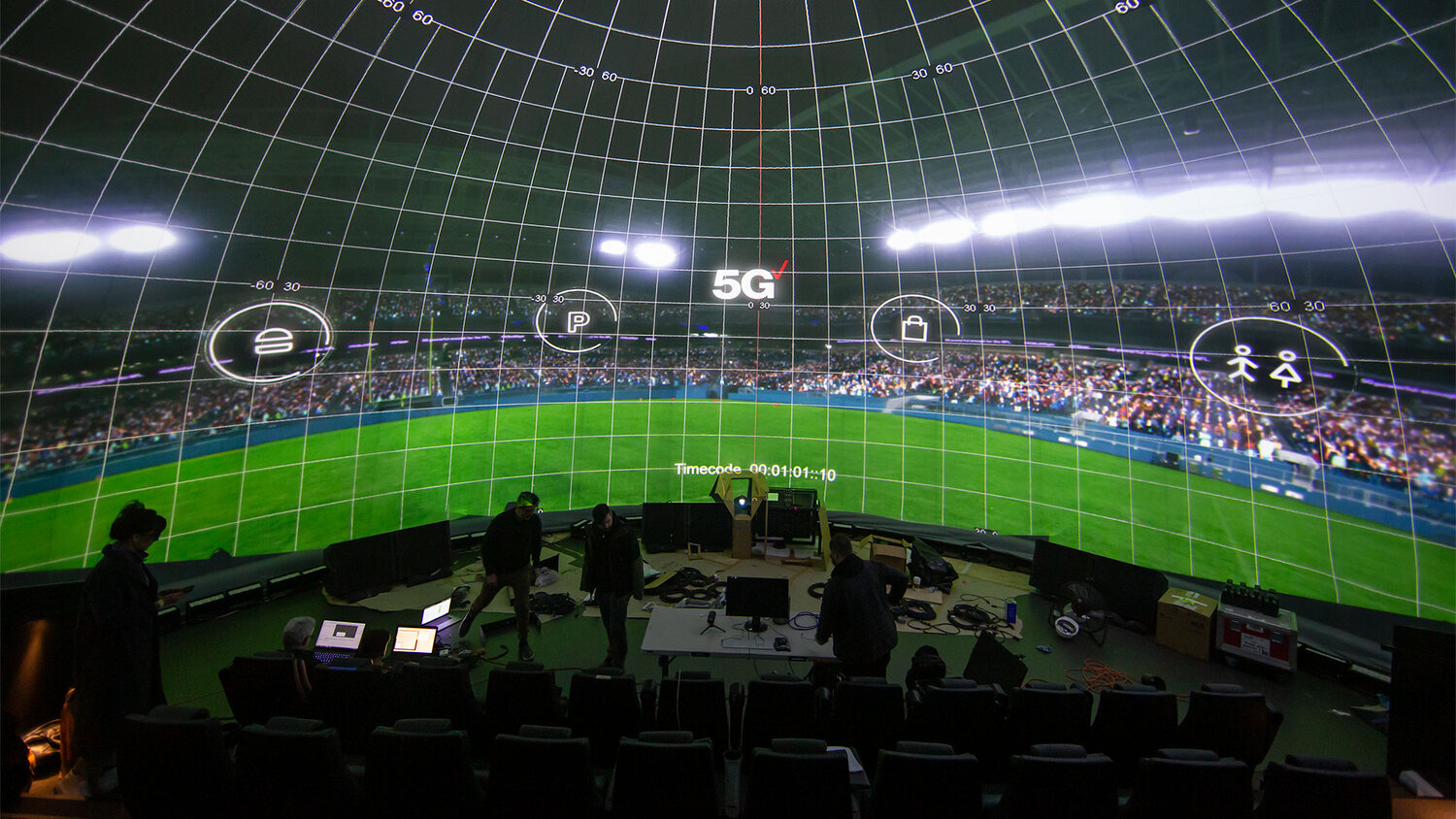

Shooting on the RED Monstro with the Entaniya HAL 250 lens, we were able to shoot in 8K with a field of view above 220 degrees. This setup let us get within inches of the players, making them appear as giants, and, unlike a 360 camera, it eliminated stitching issues entirely.

Adding to the sensory experience, we wanted to lean on one of the strengths of dome projection: its ability to simulate motion. To do so, we used a drone rig to fly in and out of the stadium and mounted our camera on a Technocrane, allowing for smooth yet dynamic moves during gameplay.

The Pipeline

Going into the project, we knew we needed to develop a flexible pipeline that allowed us to adjust the tilt of our final output as well as give our team a straightforward compositing and design canvas to work off of.

To do so, we developed a solution that converted the fisheye image from the camera to a 2:1 latlong and then back again to our final 4K-by-4K dome output. This setup gave us full control over the degree of tilt, as well as the ability to push back and zoom into each shot using our extended 220-degree FOV.
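The fisheye-to-latlong-to-dome round trip can be sketched as two per-pixel lookup functions. This is a minimal sketch, not our production tool: it assumes an ideal equidistant fisheye model (image radius proportional to the angle off the optical axis) for both the 220-degree camera lens and the 180-degree dome master, ignores real lens distortion, and all function names are hypothetical.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]  # unit direction; +Z = optical axis, +Y = up


def latlong_to_dir(u: float, v: float) -> Vec3:
    """Normalized 2:1 latlong coords (u, v in [0, 1]) to a unit direction."""
    lon = (u - 0.5) * 2.0 * math.pi
    lat = (0.5 - v) * math.pi
    return (math.cos(lat) * math.sin(lon), math.sin(lat), math.cos(lat) * math.cos(lon))


def dir_to_latlong(d: Vec3) -> Tuple[float, float]:
    x, y, z = d
    lon = math.atan2(x, z)
    lat = math.asin(max(-1.0, min(1.0, y)))
    return lon / (2.0 * math.pi) + 0.5, 0.5 - lat / math.pi


def dir_to_fisheye(d: Vec3, fov_deg: float) -> Optional[Tuple[float, float]]:
    """Equidistant fisheye projection; None if d falls outside the lens FOV."""
    x, y, z = d
    theta = math.acos(max(-1.0, min(1.0, z)))  # angle off the optical axis
    half_fov = math.radians(fov_deg) / 2.0
    if theta > half_fov:
        return None
    r = theta / half_fov  # 0 at the centre, 1 at the edge of the image circle
    phi = math.atan2(y, x)
    return 0.5 + 0.5 * r * math.cos(phi), 0.5 - 0.5 * r * math.sin(phi)


def fisheye_to_dir(fx: float, fy: float, fov_deg: float) -> Optional[Vec3]:
    """Inverse of dir_to_fisheye; None outside the image circle."""
    dx, dy = (fx - 0.5) * 2.0, (0.5 - fy) * 2.0
    r = math.hypot(dx, dy)
    if r > 1.0:
        return None
    theta = r * math.radians(fov_deg) / 2.0
    phi = math.atan2(dy, dx)
    return (math.sin(theta) * math.cos(phi), math.sin(theta) * math.sin(phi), math.cos(theta))


def rotate_x(d: Vec3, angle_deg: float) -> Vec3:
    """Rotate a direction about the X axis (the tilt axis)."""
    a = math.radians(angle_deg)
    x, y, z = d
    return (x, y * math.cos(a) - z * math.sin(a), y * math.sin(a) + z * math.cos(a))


def latlong_to_source_fisheye(u: float, v: float, lens_fov_deg: float = 220.0):
    """Step 1: for a latlong pixel, where to sample the camera's fisheye frame."""
    return dir_to_fisheye(latlong_to_dir(u, v), lens_fov_deg)


def dome_to_latlong(fx: float, fy: float, tilt_deg: float = 0.0, dome_fov_deg: float = 180.0):
    """Step 2: for a dome-master pixel, where to sample the comped latlong.

    Positive tilt moves the dome centre below the source horizon; a smaller
    dome_fov_deg zooms in, a larger one pushes back toward the lens's coverage."""
    d = fisheye_to_dir(fx, fy, dome_fov_deg)
    if d is None:
        return None
    return dir_to_latlong(rotate_x(d, tilt_deg))
```

For a 4096 × 4096 output, pixel (px, py) maps to normalized coordinates ((px + 0.5) / 4096, (py + 0.5) / 4096), and the compositing tool samples the latlong at the returned (u, v). Because tilt and zoom live only in the final lookup, they can be changed per shot without touching the comp.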

VFX + Design

With this pipeline in place, the team set out to tackle a long list of tasks, the first of which was bringing a crowd to an empty stadium. While we would have loved to do a full 3D crowd replacement, the timeline and budget didn't allow for it. Instead, we applied a stylized look to the stands, adding tracked lights and particle FX that reference the look of cell phones lit up around the stadium.

The result was solid. In addition to the crowds and clean-up, we implemented a series of scans that wiped over the scene, each indicating an interactive moment on the phone. We also added graphic treatments on top of our intro and outro drone scenes, flying through a countdown as you enter the stadium and flying above the stratosphere as you exit.

Mobile Experience

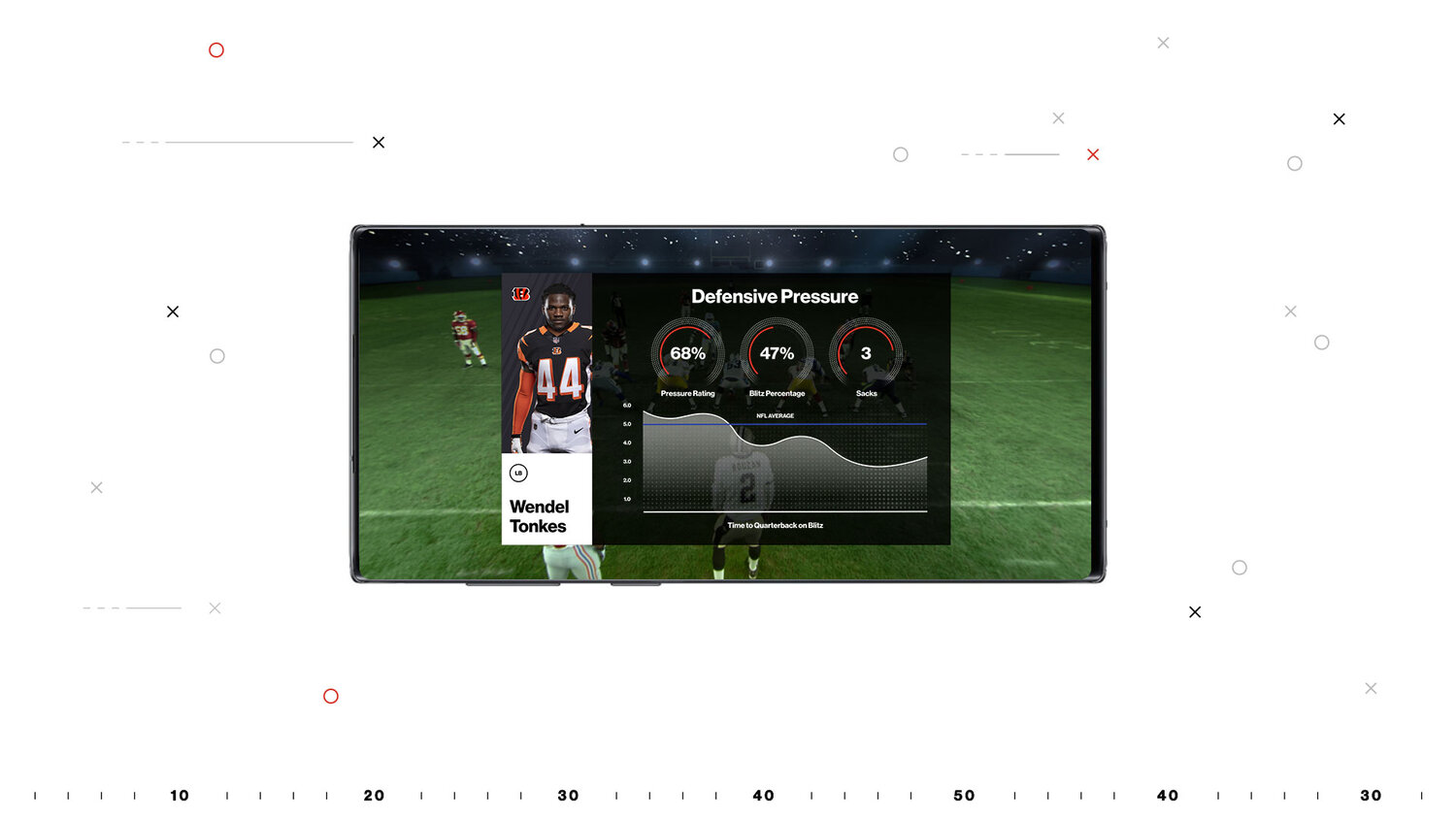

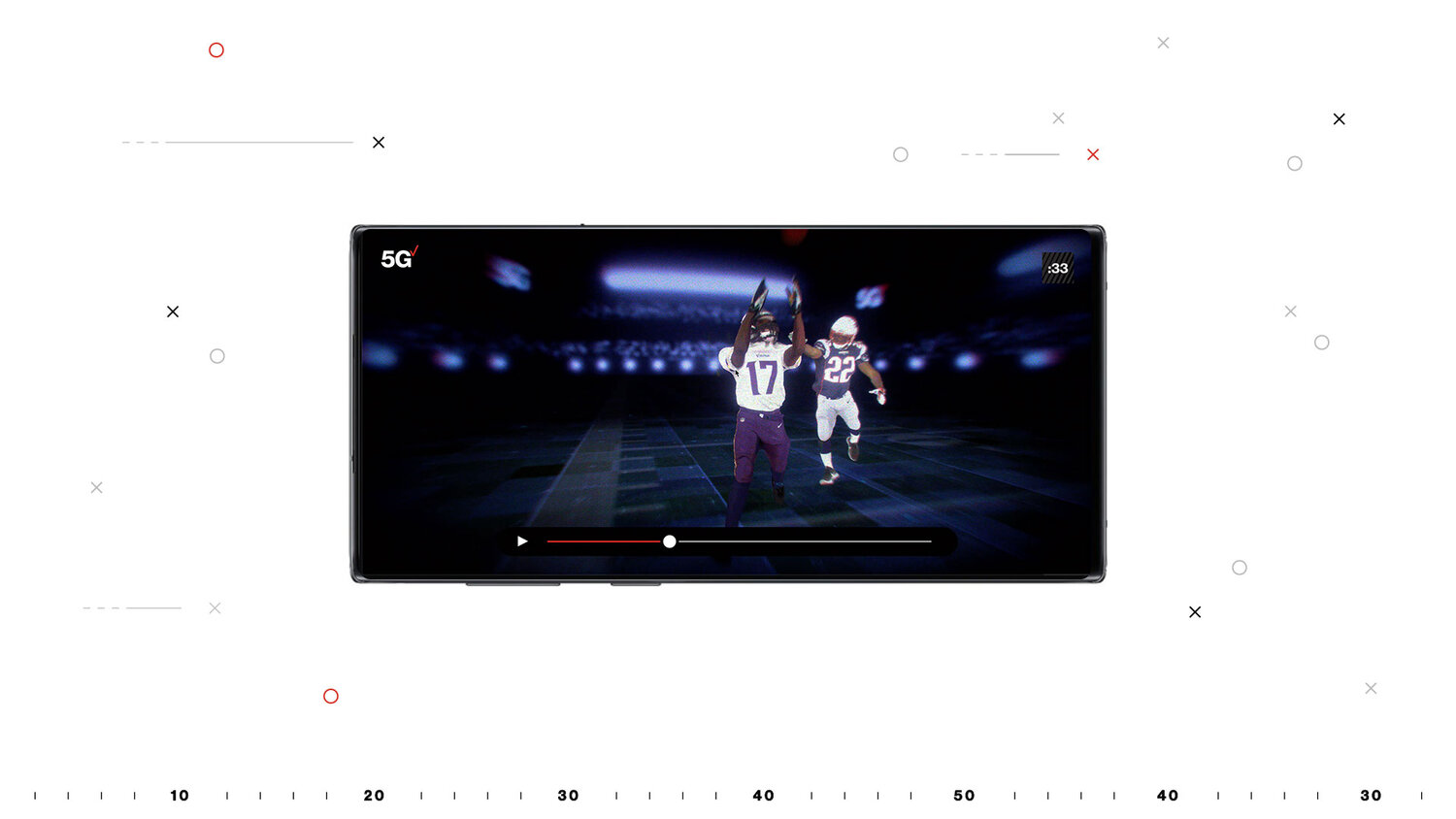

Beyond the projected dome content, the experience had a second layer of interactivity on fifty synced mobile devices, one at each viewer's seat. These 5G phones let users engage with the dome content in multiple ways, including augmented reality wayfinding and player stats, multi-camera viewing, and 3D volumetric replays.

The key challenges we aimed to tackle here from a user interaction perspective were:

• When should people be using the mobile devices during the experience?

• How does it relate to or inform the content on the dome at the time?

• How do we make the relationship between the dome and the phones feel cohesive and natural?

Since Verizon wanted to show off multiple 5G features during the experience, each requiring different device functionality, we worked with the content team to stage the phone experience, creating designated interaction windows that occurred periodically throughout the entire content piece.

During testing, we worked out how long users needed to engage comfortably with each interaction window, accounting for both onboarding for each new interaction and time to explore within each stage.
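One way to model these staged interaction windows is as a sorted schedule keyed to the content's running time, with an onboarding phase at the start of each window. The class names, stage names, timings, and the onboarding/explore split below are hypothetical illustrations, not the production values.

```python
import bisect
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class InteractionWindow:
    start_s: float             # seconds into the dome content
    end_s: float               # exclusive
    stage: str                 # hypothetical stage id, e.g. "ar_wayfinding"
    onboarding_s: float = 0.0  # time reserved for instructions before free exploration


class WindowSchedule:
    """Looks up which phone stage (if any) is active at a given content time."""

    def __init__(self, windows: List[InteractionWindow]):
        self.windows = sorted(windows, key=lambda w: w.start_s)
        for a, b in zip(self.windows, self.windows[1:]):
            if b.start_s < a.end_s:
                raise ValueError(f"windows overlap: {a.stage} and {b.stage}")
        self._starts = [w.start_s for w in self.windows]

    def active(self, t: float) -> Optional[InteractionWindow]:
        i = bisect.bisect_right(self._starts, t) - 1
        if i >= 0 and t < self.windows[i].end_s:
            return self.windows[i]
        return None  # between windows: the phone stays passive

    def phase(self, t: float) -> Optional[Tuple[str, str]]:
        """(stage, "onboarding" or "explore"), or None outside every window."""
        w = self.active(t)
        if w is None:
            return None
        return (w.stage, "onboarding" if t < w.start_s + w.onboarding_s else "explore")
```

Driving the phone UI from the same content clock as the dome means the instructions on the phone and the cues on the projection can change at the same moment.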

Keeping this interactive relationship clear and intuitive required a multifaceted approach including:

• Introductory cues and hotspots displayed on the dome projection

• Instructional language in the voiceover for the overall experience to drive it home

• Overlaying UI elements and supporting instructions on the phones in sync with the dome content

Tech Approach

As an integral part of the experience, we synced fifty mobile devices to our dome content while integrating multiple real-world 5G capabilities on each device, with users in full control. To sync the devices, we took in an LTC-encoded (linear timecode) audio feed from the AV system and decoded the signal with a custom application in openFrameworks.
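For reference, an LTC frame (SMPTE 12M linear timecode) is 80 bits, biphase-mark encoded, with BCD time digits sent least-significant bit first and a fixed sync word in bits 64 to 79. The sketch below is in Python rather than the openFrameworks C++ used on the project and covers only the frame-parsing step: it assumes clock recovery from the audio has already produced half-bit levels, and it ignores user bits and drop-frame compensation. The function names are hypothetical.

```python
from typing import List, NamedTuple

# Bits 64-79 of every LTC frame, in transmission order (SMPTE 12M sync word).
SYNC_WORD = [0, 0, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0, 1]

# (first bit, width) of each BCD digit; each digit is sent least-significant bit first.
FIELDS = {
    "frame_units": (0, 4), "frame_tens": (8, 2),
    "sec_units": (16, 4), "sec_tens": (24, 3),
    "min_units": (32, 4), "min_tens": (40, 3),
    "hour_units": (48, 4), "hour_tens": (56, 2),
}
DROP_FRAME_BIT = 10


class Timecode(NamedTuple):
    hours: int
    minutes: int
    seconds: int
    frames: int
    drop_frame: bool = False


def biphase_mark_to_bits(half_cells: List[int]) -> List[int]:
    """A '1' changes level mid-cell and a '0' does not.
    Assumes clock recovery has already sliced the audio into half-bit levels."""
    return [int(half_cells[i] != half_cells[i + 1]) for i in range(0, len(half_cells) - 1, 2)]


def _read(bits: List[int], start: int, width: int) -> int:
    return sum(bits[start + k] << k for k in range(width))


def decode_ltc_frame(bits: List[int]) -> Timecode:
    if len(bits) != 80 or bits[64:80] != SYNC_WORD:
        raise ValueError("not an aligned 80-bit LTC frame")
    d = {name: _read(bits, start, width) for name, (start, width) in FIELDS.items()}
    return Timecode(
        hours=d["hour_tens"] * 10 + d["hour_units"],
        minutes=d["min_tens"] * 10 + d["min_units"],
        seconds=d["sec_tens"] * 10 + d["sec_units"],
        frames=d["frame_tens"] * 10 + d["frame_units"],
        drop_frame=bool(bits[DROP_FRAME_BIT]),
    )


def encode_ltc_frame(tc: Timecode) -> List[int]:
    """Inverse of decode_ltc_frame for testing; user bits and other flags stay 0."""
    digits = {
        "frame_units": tc.frames % 10, "frame_tens": tc.frames // 10,
        "sec_units": tc.seconds % 10, "sec_tens": tc.seconds // 10,
        "min_units": tc.minutes % 10, "min_tens": tc.minutes // 10,
        "hour_units": tc.hours % 10, "hour_tens": tc.hours // 10,
    }
    bits = [0] * 80
    for name, (start, width) in FIELDS.items():
        for k in range(width):
            bits[start + k] = (digits[name] >> k) & 1
    bits[DROP_FRAME_BIT] = int(tc.drop_frame)
    bits[64:80] = SYNC_WORD
    return bits


def to_seconds(tc: Timecode, fps: float) -> float:
    """Content position in seconds; ignores drop-frame compensation."""
    return tc.hours * 3600 + tc.minutes * 60 + tc.seconds + tc.frames / fps
```

Checking the sync word before trusting a frame is what makes an audio timecode feed robust: a misaligned or noisy frame is rejected rather than producing a wrong position.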

The decoded timecode was passed through a custom-built Node server that communicated with each device over WebSockets, keeping every phone precisely and stably in sync with the dome.
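A hypothetical sketch of the device side of that sync: the server broadcasts the current content position stamped with its own clock, and each phone estimates the server clock offset with NTP-style round-trip pings, then extrapolates the position between messages. The message schema, field names, and the NTP-style offset estimation are assumptions for illustration; the original setup is only described as a Node server talking to devices over WebSockets.

```python
import json
import time
from typing import Callable, List, Optional, Tuple

# (t0, t1, t2, t3): client send, server receive, server reply, client receive
PingSample = Tuple[float, float, float, float]


def make_timecode_message(position_s: float, server_time_s: float) -> str:
    """Hypothetical payload the server broadcasts for each decoded LTC frame."""
    return json.dumps({"type": "timecode", "position": position_s, "server_time": server_time_s})


def estimate_offset(t0: float, t1: float, t2: float, t3: float) -> Tuple[float, float]:
    """NTP-style estimate: returns (server clock minus client clock, round-trip delay)."""
    offset = ((t1 - t0) + (t2 - t3)) / 2.0
    delay = (t3 - t0) - (t2 - t1)
    return offset, delay


class PlaybackClock:
    """Tracks the dome content position on one phone."""

    def __init__(self, now: Callable[[], float] = time.monotonic):
        self.now = now
        self.offset = 0.0
        self.anchor_position: Optional[float] = None
        self.anchor_server_time = 0.0

    def add_ping_samples(self, samples: List[PingSample]) -> None:
        # Keep the offset from the lowest-delay exchange: it is the least skewed by jitter.
        self.offset = min((estimate_offset(*s) for s in samples), key=lambda od: od[1])[0]

    def on_message(self, raw: str) -> None:
        msg = json.loads(raw)
        if msg.get("type") == "timecode":
            self.anchor_position = msg["position"]
            self.anchor_server_time = msg["server_time"]

    def position(self) -> float:
        """Current content position, extrapolated from the latest timecode message."""
        if self.anchor_position is None:
            raise RuntimeError("no timecode received yet")
        server_now = self.now() + self.offset
        return self.anchor_position + (server_now - self.anchor_server_time)
```

Extrapolating from the last message lets each phone render at its own display rate while timecode messages arrive at the content frame rate, and a late message only shifts the anchor rather than causing a visible jump.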

AR was especially demanding given the environment: low light, moving images, and a warped projection surface are everything you don't want for AR tracking.

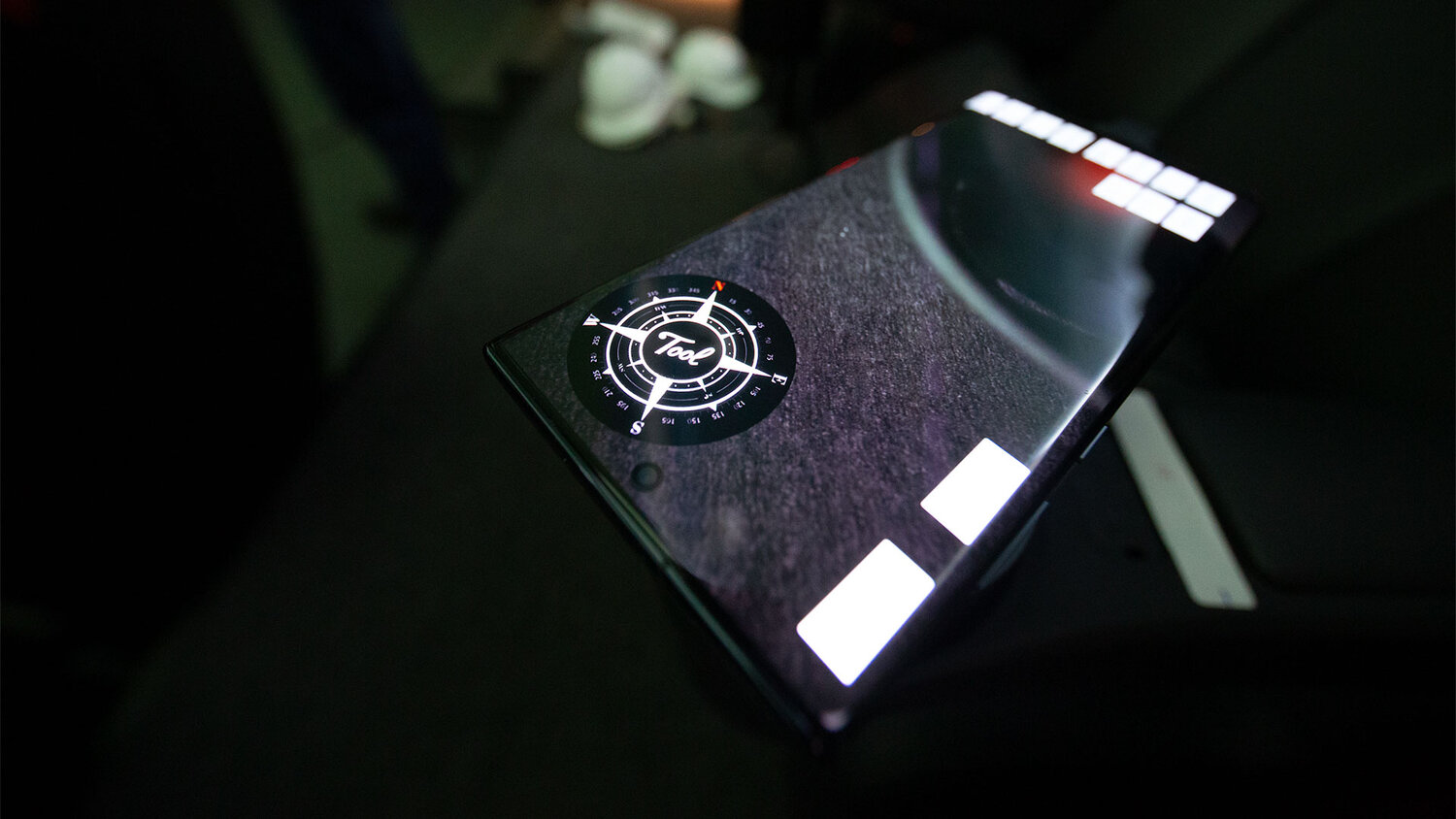

To compensate, we used multiple inputs to orient each device: compass data and rotation data from ARCore (3DOF), plus a CAD model of our dome in which each real-world seat position was matched to its corresponding location in our virtual environment.
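Matching each seat to a virtual position matters because the same hotspot on the dome sits at a different compass bearing and elevation from every seat. A minimal sketch, assuming an east/north/up coordinate frame in metres and, for simplicity, a hemispherical dome centred on the origin (the real CAD model would supply the actual surface); `Point`, `dome_point`, and the seat table are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Point:
    """Position in metres: east, north, up (a hypothetical venue frame)."""
    east: float
    north: float
    up: float


def dome_point(azimuth_deg: float, elevation_deg: float, radius_m: float) -> Point:
    """Point on an idealised hemispherical dome centred at the origin."""
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    return Point(radius_m * math.cos(el) * math.sin(az),
                 radius_m * math.cos(el) * math.cos(az),
                 radius_m * math.sin(el))


def bearing_and_elevation(seat: Point, target: Point) -> Tuple[float, float]:
    """Compass bearing (0 = north, 90 = east) and elevation of target as seen from seat."""
    de = target.east - seat.east
    dn = target.north - seat.north
    du = target.up - seat.up
    bearing = math.degrees(math.atan2(de, dn)) % 360.0
    elevation = math.degrees(math.atan2(du, math.hypot(de, dn)))
    return bearing, elevation


# Hypothetical seat table, as it might be exported from the venue's CAD model.
SEATS = {"A1": Point(0.0, 0.0, 0.0), "C4": Point(3.0, -2.0, 0.0)}
```

From the dome centre a hotspot's bearing equals its azimuth on the dome, but from an off-centre seat it can differ by many degrees, which is why a single global orientation would not work for every seat.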

Prototyping

Because the environment was so challenging, we needed the AR tracking to be as precise as possible, so we built multiple prototypes early in the project to make sure our final approach was bulletproof.

One early idea was to embed AR markers in the content, but prototyping showed that the warp and deformation of the projected content would distort the perspective of the AR content, breaking the experience for seats closer to the screen.

3DOF versus 6DOF was the main question to test, and the prototype compared three approaches. 6DOF plus gyroscope data worked when there was static content on the projection, but the "pick up your phone" motion and the darkness of the dome caused the content to drift a lot. 3DOF plus gyroscope data initially seemed like the best approach, but the gyroscope data was too noisy and unstable, even after filtering. We ended up taking a simpler route: 3DOF plus compass data, which refined the AR tracking and gave the app a true north that we could monitor and tweak from the backend. The final setup combined:

• A 3D model of the real-world space, in which each seat has a corresponding virtual position.

• Compass data to give the app a true north, so the phone always knows where the content is.

• A control backend to monitor and calibrate phones on the fly between sessions, without the need for new builds.
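Put together, those three pieces might look roughly like the following. This is a sketch under stated assumptions, not the shipped code: the smooth but arbitrarily oriented 3DOF yaw is aligned to the noisy but drift-free compass by averaging their difference with a circular mean, and a per-seat trim fetched from a backend (hypothetical JSON schema) corrects residual error without a new build. It assumes both yaw inputs are in degrees, clockwise-positive like a compass; ARCore's own rotation would need converting to that convention first. `NorthAlignment` and its methods are hypothetical names.

```python
import json
import math
from typing import Iterable, List


def wrap_deg(a: float) -> float:
    """Wrap an angle into [-180, 180)."""
    return (a + 180.0) % 360.0 - 180.0


def circular_mean_deg(angles: Iterable[float]) -> float:
    """Mean of angles that handles the 359/1 degree wraparound correctly."""
    angles = list(angles)
    s = sum(math.sin(math.radians(a)) for a in angles)
    c = sum(math.cos(math.radians(a)) for a in angles)
    return math.degrees(math.atan2(s, c))


class NorthAlignment:
    """Aligns an arbitrary 3DOF yaw frame to compass north for one phone.

    Assumes both inputs are degrees, clockwise-positive like a compass."""

    def __init__(self, window: int = 50):
        self.window = window
        self.samples: List[float] = []  # recent (compass - 3DOF yaw) differences
        self.trim_deg = 0.0             # per-seat correction pushed from the backend

    def add_sample(self, compass_heading_deg: float, ar_yaw_deg: float) -> None:
        self.samples.append(wrap_deg(compass_heading_deg - ar_yaw_deg))
        del self.samples[:-self.window]  # keep only the most recent samples

    def apply_backend_config(self, raw_json: str, seat_id: str) -> None:
        """Hypothetical schema: {"seat_trim_deg": {"<seat id>": <degrees>}}."""
        config = json.loads(raw_json)
        self.trim_deg = float(config.get("seat_trim_deg", {}).get(seat_id, 0.0))

    def offset_deg(self) -> float:
        base = circular_mean_deg(self.samples) if self.samples else 0.0
        return wrap_deg(base + self.trim_deg)

    def world_heading(self, ar_yaw_deg: float) -> float:
        """Compass-referenced heading (0 = north) for the current 3DOF yaw."""
        return (ar_yaw_deg + self.offset_deg()) % 360.0
```

Averaging the difference rather than the raw compass keeps the view responsive, since it moves with the rotation sensor, while still anchoring it to north; the circular mean avoids the wraparound error a plain average makes near 0/360 degrees.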